While testing the Python kafka package and its KafkaProducer class, I found that events were “lost”; i.e., they were never persisted in the destination topic. Various blogs suggest adding a sleep(2) call (or some other arbitrary delay) after sending, which seemed like an odd workaround. I ran strace against the Python process and found a couple of interesting things:

- Events were sent a few at a time (via the sendto() system call)

- Not all events were sent

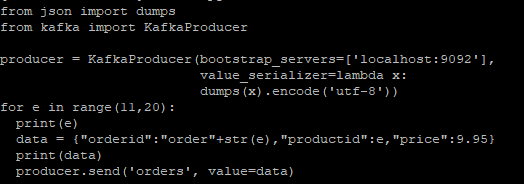

I ended up finding the flush() call, which blocks until the buffered events have been sent, and with it the events were successfully persisted to the topic.

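I also found that queue.buffering.max.ms controls how long the producer lets events accumulate in its internal queue before sending them to the broker. batch.num.messages caps the number of events in a batch, so it also affects when events are sent for persistence. Note that these are the librdkafka (confluent-kafka) setting names; in kafka-python the closest equivalents appear to be linger_ms and batch_size (the latter a size in bytes rather than a message count).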
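To make the two thresholds concrete, here is a toy model of a buffering producer. It is not a real Kafka client: the class name ToyBufferedProducer, its parameters, and the values used are all made up for illustration, and a real producer checks these thresholds from a background thread rather than only inside send().

```python
import time


class ToyBufferedProducer:
    """Toy stand-in for a batching producer (hypothetical, not a Kafka client)."""

    def __init__(self, max_wait_ms, batch_num_messages, clock=time.monotonic):
        self.max_wait_ms = max_wait_ms                # plays the role of queue.buffering.max.ms
        self.batch_num_messages = batch_num_messages  # plays the role of batch.num.messages
        self._clock = clock
        self._queue = []         # events accepted but not yet "sent"
        self._oldest = None      # time the oldest queued event arrived
        self.delivered = []      # events that reached the pretend broker

    def send(self, event):
        if not self._queue:
            self._oldest = self._clock()
        self._queue.append(event)
        self._drain_if_due()

    def _drain_if_due(self):
        waited_ms = (self._clock() - self._oldest) * 1000
        batch_full = len(self._queue) >= self.batch_num_messages
        if batch_full or waited_ms >= self.max_wait_ms:
            self.flush()

    def flush(self):
        """Deliver everything currently queued, regardless of thresholds."""
        self.delivered.extend(self._queue)
        self._queue.clear()
        self._oldest = None


# Illustrative values only: wait up to one second or until 100 events queue up.
producer = ToyBufferedProducer(max_wait_ms=1000, batch_num_messages=100)
for i in range(10):
    producer.send(f"event-{i}")
print("delivered before flush:", len(producer.delivered))
producer.flush()
print("delivered after flush:", len(producer.delivered))
```

In this model, ten quick sends reach neither threshold, so nothing is delivered until flush() is called. If the process exited before that point, the queued events would simply be gone.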

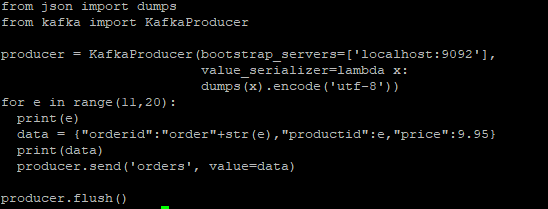

As such, a script that sends ten events in a loop and then exits will most likely send no events to the broker, as a loop of ten will probably finish before either threshold above is reached.

However, adding a flush() call at the end will persist the events.
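A minimal sketch of that pattern with kafka-python might look like the following. The broker address localhost:9092, the topic name test-topic, and the helper names send_events and main are assumptions for illustration, not taken from my original test script.

```python
def send_events(producer, topic, count=10):
    """Send `count` small events, then block until they have been sent."""
    for i in range(count):
        # send() only queues the event; it returns before anything hits the wire.
        producer.send(topic, f"event-{i}".encode("utf-8"))
    # Without this call the script may exit while events are still buffered.
    producer.flush()


def main():
    # Imported lazily so send_events() can be tried without the package installed.
    from kafka import KafkaProducer  # provided by kafka-python

    # Assumed broker address; adjust for your environment.
    producer = KafkaProducer(bootstrap_servers="localhost:9092")
    try:
        send_events(producer, "test-topic")  # hypothetical topic name
    finally:
        producer.close()
```

Calling main() requires a reachable broker. The point is only the ordering: queue everything with send(), then call flush() before the process is allowed to exit, rather than sleeping for an arbitrary interval.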